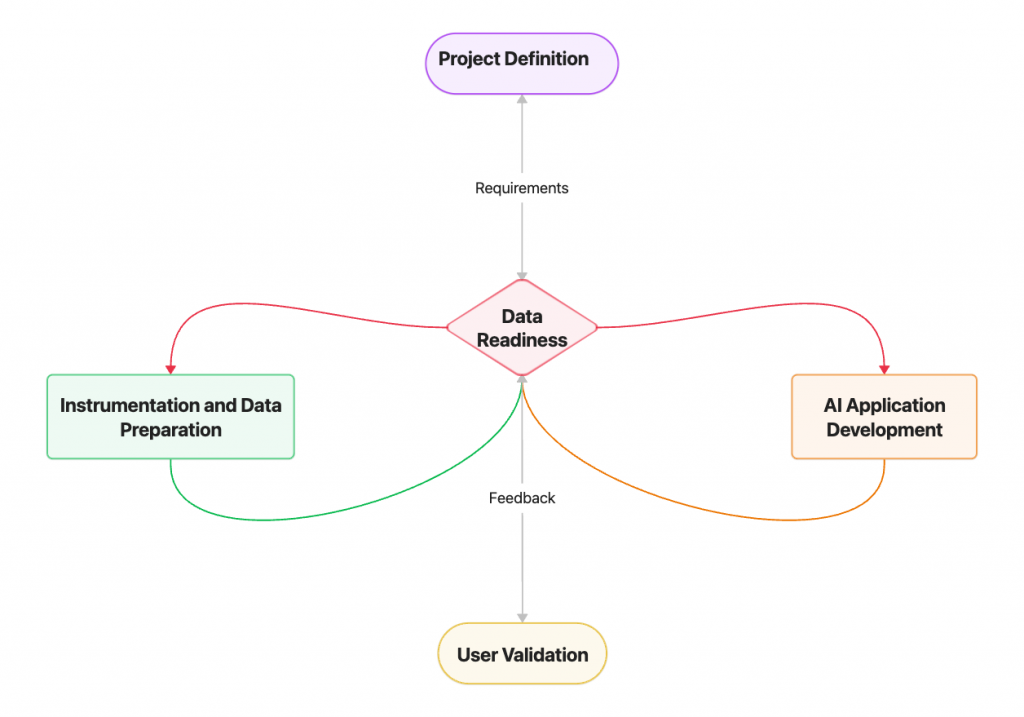

It’s widely recognized that data preparation is critical for AI projects. But where does it actually fit in the development process? In my previous post, AI Application Development – Building with Context Intelligence, we explored how injecting relevant context supports different technical patterns. Here we’ll drill deeper into data preparation and its relationship with other activities in the AI Application Journey.

With the initial project definitions complete (see [Project Definition – Establishing the Foundation](https://www.linkedin.com/pulse/context-intelligence-project-definition-foundation-dan-wolfson-g2qkc)) and development strategies established, the project team now has:

- Scoped-Resource Lists identifying which data is suitable for the project

- Query Routing showing how and where to use this data

- RAG Contextualization defining the contextual retrieval approaches used in RAG processing

They now need this data deployed where it can be used. For example, in our sales forecasting advisor application, the RAG systems need actual sales data, the AI models need prepared training sets, and the MCP tools need to be configured with the locations of the data services they call.

Data Preparation delivers this data through executable and, ideally, automatable processes. It involves provisioning, analyzing, transforming, loading, validating, and reporting on the data, providing the ingredients our components cook with.

Context is both used and produced throughout data preparation.

- It is used in selecting appropriate data sources and understanding quality characteristics.

- It is also produced by instrumenting the execution of data pipelines and quality analyses.
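To illustrate how context is produced as a side effect of running a pipeline, here is a minimal sketch, assuming a pipeline is an ordered list of named stage functions over lists of records. The `Pipeline`, `StageEvent`, `transform`, and `validate` names, the event fields, and the sample rows are all hypothetical and chosen only for illustration.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Record = Dict[str, object]
Stage = Callable[[List[Record]], List[Record]]


@dataclass
class StageEvent:
    """Context produced by one stage run (a hypothetical schema)."""
    stage: str
    rows_in: int
    rows_out: int
    seconds: float


@dataclass
class Pipeline:
    stages: List[Tuple[str, Stage]]
    events: List[StageEvent] = field(default_factory=list)

    def run(self, records: List[Record]) -> List[Record]:
        for name, fn in self.stages:
            start = time.perf_counter()
            result = fn(records)
            # Instrumentation: record what each stage did, not just its output.
            self.events.append(
                StageEvent(name, len(records), len(result), time.perf_counter() - start)
            )
            records = result
        return records


def transform(rows: List[Record]) -> List[Record]:
    # Normalize region codes so values are consistent across sources.
    return [{**r, "region": str(r["region"]).strip().upper()} for r in rows]


def validate(rows: List[Record]) -> List[Record]:
    # Drop rows with no sales amount.
    return [r for r in rows if r.get("amount") is not None]


# Illustrative input standing in for provisioned sales data.
raw = [
    {"region": " eu ", "amount": 120.0},
    {"region": "us", "amount": None},
    {"region": "Apac", "amount": 75.5},
]
pipeline = Pipeline([("transform", transform), ("validate", validate)])
prepared = pipeline.run(raw)
for event in pipeline.events:
    print(event.stage, event.rows_in, "->", event.rows_out)
```

Running this prints `transform 3 -> 3` and `validate 3 -> 2`, showing one record dropped during validation. In a real deployment these events would be sent to a tracking or metadata system rather than kept in an in-memory list.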

Context Intelligence provides tracking and history of:

- experiments

- analyses

- transformations

This history helps us understand what’s working (or not). Tools such as MLflow annotate and track many of these processes, and Context Intelligence systems like Egeria can integrate this instrumentation data to build a comprehensive picture.
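A minimal sketch of what "tracking and history" enables: recording each experiment with its parameters and metrics, then asking which run worked best. `Run`, `RunHistory`, the run names, and the metric values are hypothetical and purely illustrative; in practice a tool such as MLflow would record runs through its tracking API (for example `mlflow.log_param` and `mlflow.log_metric`).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Run:
    name: str
    params: Dict[str, object]
    metrics: Dict[str, float] = field(default_factory=dict)


class RunHistory:
    """A minimal in-memory history of experiments, analyses and transformations."""

    def __init__(self) -> None:
        self.runs: List[Run] = []

    def record(self, name: str, params: Dict[str, object], metrics: Dict[str, float]) -> Run:
        run = Run(name, dict(params), dict(metrics))
        self.runs.append(run)
        return run

    def best(self, metric: str, higher_is_better: bool = True) -> Optional[Run]:
        # Only compare runs that actually reported this metric.
        scored = [r for r in self.runs if metric in r.metrics]
        if not scored:
            return None
        pick = max if higher_is_better else min
        return pick(scored, key=lambda r: r.metrics[metric])


history = RunHistory()
# Metric values are made up for illustration, not measured results.
history.record("chunk-512", {"chunk_size": 512}, {"retrieval_hit_rate": 0.71})
history.record("chunk-256", {"chunk_size": 256}, {"retrieval_hit_rate": 0.78})
best = history.best("retrieval_hit_rate")
print(best.name, best.params)
```

Keeping parameters and metrics together per run is what lets a later question ("which chunk size worked best?") be answered from history rather than from memory.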

5 Key Considerations

Data preparation for AI requires balancing multiple concerns, each with implications for how the AI Application will perform. Here are five aspects to consider:

1. Scope & Representativeness – Ensuring the data covers all relevant business scenarios without bias. Context Intelligence helps here through Data Lenses, which define the scoped dimensions of interest (e.g. temporal, geospatial, and organizational), and Data Scope classifications, which characterize resources along those dimensions. Resources whose classified scopes fall within the boundaries of a Data Lens are then readily visible. Egeria provides the mechanisms to classify resources with data scopes and to filter them through a Data Lens.

2. Security, Privacy & Compliance – Conforming to governance requirements, licensing, and regulatory constraints. Context Intelligence connects policies to the data they govern. When preparing data, teams see not just which assets exist within scope, but which policies apply: GDPR requirements for European data, licensing restrictions for training data, access controls for sensitive forecasts. Policies flow from Context Intelligence into preparation pipelines, ensuring compliance by design rather than as an afterthought.

3. Quality & Trustworthiness – Ensuring data has the appropriate granularity and is normalized for consistent values and structure over time. Context Intelligence maintains the connections (lineage) between prepared data and source systems, enabling teams to document quality issues with business context. Instead of a bare “null values detected”, users see “missing regional attribution affects EU forecasts for Finance users.” This context creates escalation paths to data owners and tracks quality metrics over time, linking technical problems to business impact.

4. Relevance & Understandability – Ensuring data addresses business problems and gives users clear provenance and context. Context Intelligence ensures that the metadata required for filtering and re-ranking flows through preparation pipelines. When documents are chunked and embedded for RAG indices, Context Intelligence provides the business context metadata (region, product line, regulatory environment, content authority) that makes retrieval context-aware. When MCP tools are configured, Context Intelligence supplies their capabilities, business characteristics, scope, intent, and governance requirements.

5. Performance & Cost – Balancing performance, quality, and cost is essential for scaling from experiments to production systems. Context Intelligence links and integrates the multiple tools that observe different metrics and points of view (performance, cost, hallucinations, usage, relevance). These different kinds of metrics need to be woven together to strike a balance between operational efficiency and business value.
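Consideration 1 can be made concrete with a small sketch. It assumes, for illustration only, that a Data Scope is a date range plus sets of regions and organizational units, and that a resource fits a Data Lens when every dimension lies inside the lens boundaries. `DataScope`, `DataLens`, `Resource`, `visible`, and the resource names are hypothetical; this is not Egeria's API.

```python
from dataclasses import dataclass
from datetime import date
from typing import FrozenSet, List


@dataclass(frozen=True)
class DataScope:
    """Hypothetical scope classification attached to a resource."""
    start: date
    end: date
    regions: FrozenSet[str]
    org_units: FrozenSet[str]


@dataclass(frozen=True)
class DataLens:
    """Hypothetical lens: the dimensional boundaries a project cares about."""
    start: date
    end: date
    regions: FrozenSet[str]
    org_units: FrozenSet[str]

    def contains(self, scope: DataScope) -> bool:
        # Visible only if every scoped dimension falls inside the lens.
        return (
            self.start <= scope.start
            and scope.end <= self.end
            and scope.regions <= self.regions
            and scope.org_units <= self.org_units
        )


@dataclass(frozen=True)
class Resource:
    name: str
    scope: DataScope


def visible(resources: List[Resource], lens: DataLens) -> List[str]:
    return [r.name for r in resources if lens.contains(r.scope)]


lens = DataLens(date(2022, 1, 1), date(2024, 12, 31),
                frozenset({"EU", "US"}), frozenset({"Sales", "Finance"}))
resources = [
    Resource("eu_sales_2023", DataScope(date(2023, 1, 1), date(2023, 12, 31),
                                        frozenset({"EU"}), frozenset({"Sales"}))),
    Resource("apac_sales_2023", DataScope(date(2023, 1, 1), date(2023, 12, 31),
                                          frozenset({"APAC"}), frozenset({"Sales"}))),
    Resource("us_finance_2019", DataScope(date(2019, 1, 1), date(2019, 12, 31),
                                          frozenset({"US"}), frozenset({"Finance"}))),
]
print(visible(resources, lens))
```

Only `eu_sales_2023` passes: the APAC resource falls outside the lens's regions, and the 2019 resource falls outside its time window. A real system might also support partial-overlap matching; containment is simply the reading used here.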
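For consideration 2, one way policies could flow into a preparation pipeline is sketched below, assuming each policy is identified by a set of tags and applies when an asset carries all of them. The policy catalog, tag names, `PERSONAL_FIELDS`, and the field-stripping control are hypothetical illustrations; real GDPR or licensing obligations are far richer than dropping columns.

```python
from typing import Dict, FrozenSet, List, Tuple

Row = Dict[str, object]

# Hypothetical policy catalog: a policy applies when an asset carries all of its tags.
POLICIES: Dict[str, FrozenSet[str]] = {
    "GDPR": frozenset({"region:EU", "personal-data"}),
    "Restricted access": frozenset({"sensitive-forecast"}),
    "Training-data license": frozenset({"use:training", "third-party"}),
}

# Hypothetical list of direct identifiers to strip when GDPR applies.
PERSONAL_FIELDS = frozenset({"customer_email", "customer_name"})


def applicable_policies(asset_tags: FrozenSet[str]) -> List[str]:
    return sorted(name for name, required in POLICIES.items() if required <= asset_tags)


def prepare(rows: List[Row], asset_tags: FrozenSet[str]) -> Tuple[List[Row], List[str]]:
    policies = applicable_policies(asset_tags)
    if "GDPR" in policies:
        # Illustrative control only: drop direct identifiers before loading.
        rows = [{k: v for k, v in r.items() if k not in PERSONAL_FIELDS} for r in rows]
    return rows, policies


rows, policies = prepare(
    [{"customer_email": "a@example.com", "region": "EU", "amount": 10.0}],
    frozenset({"region:EU", "personal-data", "use:training"}),
)
print(policies)
print(rows)
```

Here only GDPR applies (the asset is not tagged `third-party`, so the licensing policy does not), and the pipeline strips the email field. Returning the applied policy names alongside the data also gives the pipeline something to report back as context.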
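For consideration 4, the sketch below shows business context metadata travelling with each chunk into a RAG index and being used as a filter before ranking. It assumes naive fixed-size word chunking and replaces embedding similarity with a toy word-overlap score, so it illustrates the flow of metadata rather than a production retriever. `Chunk`, `chunk_document`, `retrieve`, and the metadata keys are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Chunk:
    text: str
    metadata: Dict[str, str]


def chunk_document(text: str, metadata: Dict[str, str], max_words: int = 50) -> List[Chunk]:
    """Split into fixed-size word windows; each chunk inherits the document's business context."""
    words = text.split()
    return [
        Chunk(" ".join(words[i:i + max_words]), dict(metadata))
        for i in range(0, len(words), max_words)
    ]


def retrieve(chunks: List[Chunk], query: str, filters: Dict[str, str], k: int = 3) -> List[Chunk]:
    # Context-aware step: keep only chunks whose metadata matches every filter.
    candidates = [c for c in chunks
                  if all(c.metadata.get(key) == value for key, value in filters.items())]
    # Toy ranking by word overlap; a real system would rank by embedding similarity.
    terms = set(query.lower().split())
    ranked = sorted(candidates,
                    key=lambda c: len(terms & set(c.text.lower().split())),
                    reverse=True)
    return ranked[:k]


index = chunk_document(
    "EU revenue grew in Q3 driven by retail",
    {"region": "EU", "product_line": "retail", "authority": "finance-approved"},
    max_words=4,
) + chunk_document(
    "US revenue fell in Q3",
    {"region": "US", "product_line": "retail", "authority": "draft"},
    max_words=4,
)

for chunk in retrieve(index, "Q3 revenue", {"region": "EU", "authority": "finance-approved"}):
    print(chunk.text)
```

Without the metadata filter, the US draft chunks would compete on text similarity alone. With it, only finance-approved EU content reaches ranking, which is the sense in which the metadata makes retrieval context-aware.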

These aspects provide useful perspectives on the design and implementation of Data Preparation processes. However, we need to recognize that evolving an AI Application from an interesting experiment through pilots and betas into production is fundamentally an iterative and collaborative process, and that is where our next post will begin.